From our previous work, you probably know that we at Rupert believe the data expert’s biggest time sink is not creating content (reports, dashboards, and so on), but rather maintaining it and supporting business stakeholders with using it.

This is why, at the heart of Rupert, lies a unique search engine. The engine crawls through data assets from across the organization, indexing them and documenting them. When a business stakeholder submits a request to the data expert, the engine surfaces the most appropriate assets along with any additional context that will help them extract the insights they need.

Today, we’re releasing a dataset that we collected, cleaned, and analyzed, as part of our research for our contextual search engine. This dataset is the first of its kind as it addresses challenges that current datasets do not. We’re releasing it with the hope it will be used to make data experts’ data analyses even more accessible to business stakeholders.

Introduction

The vast majority of data today still resides in relational databases that are only accessible to a small subset of people in the organization. This is because relational databases need complex, technical SQL queries, written to fetch data within the databases, build reports, and perform analytics.

However, if we could enable people to directly interact with large-scale enterprise databases using natural language text or voice, it would liberate data experts from the redundant work of servicing their business stakeholders, while cutting time to insight. From there, the Text-to-SQL mission was born.

The goal of Text-to-SQL is to take a question or utterance from a user, understand its intent and the data they wish to see, and convert this into an executable SQL query run over the right database. This would make data easily accessible to analysts and non-technical users alike, saving time and effort for data experts to work on more complex tasks. In addition, organizations would reduce costs in terms of time-to-insight as well as realizing the full potential of their data and analytics teams.

In research settings, Text-to-SQL falls under Semantic Parsing - the task of converting natural language to a logical form. As part of this research, machine-learning models are being trained using datasets that feature pairs of SQL queries and their equivalent natural language utterances. Most available semantic parsing datasets that consist of these pairs -- notably Spider and WikiSQL -- were collected solely for the purpose of training and evaluation of natural language understanding systems, As a result, they do not contain any of the richness, variety, and unpredictability related to natural-occurring utterances posed by curious, data-oriented humans.

Our New Dataset

Today, we release the Stack Exchange Data Explorer (SEDE) dataset, a dataset with 12,023 pairs of utterances and SQL queries collected from real usage on Stack Exchange website. These pairs contain a variety of real-world challenges which have rarely been reflected in other semantic parsing datasets.

Stack Exchange is an online question & answers community, with over 3 million questions asked in the past year alone. This created a huge repository of questions, answers, and other interactions on them. Stack Exchange released this collection in a database form that can be queried by users for research purposes. They also added the Data Explorer, a tool that allows any user to query this database with T-SQL (a SQL variant) queries to answer any question they might have. The database schema is spread across 29 tables and 211 columns. Common natural language utterance topics are published posts, comments, votes, tags, awards, etc.

Any query that users run in the Data Explorer is logged, and users are able to save the queries with a title and description for future use by the public. All of these logs are available online, as Stack Exchange released these queries, together with their title, description, and other meta-data. Today, we publish our clean version of this log, which contains 12,023 samples. These samples and logs perfectly illustrate the challenges, variations, and hidden assumptions humans make when they write a SQL query in order to solve a logical question, something we believe no other dataset has shown in the past.

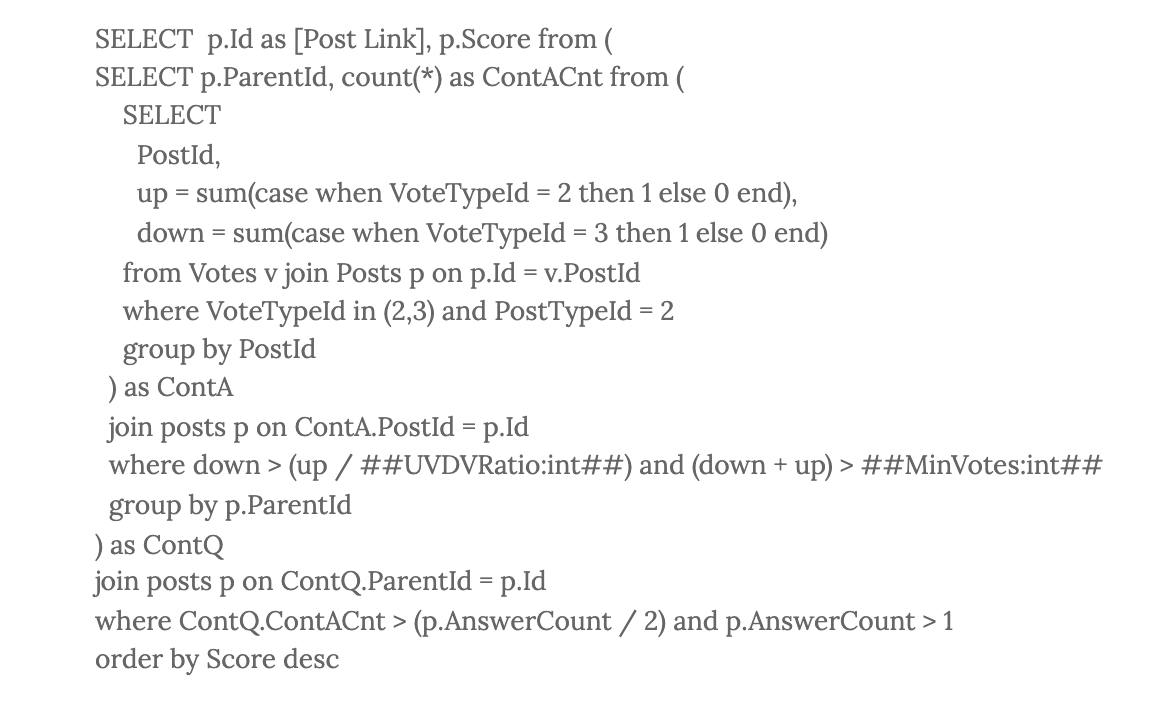

An example from the SEDE dataset:

Title: Questions which attract bad answers

Description: Search for posts which have attracted significantly more controversial or bad answers than good ones

SQL:

The example above shows a SQL query from SEDE, with its title and description. It introduces several challenges that have not commonly been addressed in the currently available datasets: comparison between different subsets, complex usage of 2 nested sub-queries, and an under-specified question, which does not state what “significantly more” means (solved in this case with an input parameter (##UVDVRation##).

Real-World Challenges in SEDE

Utterance-Query Alignment in Datasets- One rising issue with other datasets is that their utterances are often aligned to their SQL query counterparts in an unnatural way, such that the columns and the required computations are explicitly mentioned. In contrast, natural utterances often do not explicitly mention these, since the schema of the database is not necessarily known to the asking user. For example, the question from Spider "titles of films that include 'Deleted Scenes' in their special feature section" might have been more naturally phrased as "films with deleted scenes" in a real-world setting.

Well-Specified Utterances for SQL Queries - The utterances in academic datasets are mostly well-specified, whereas in contrast, natural utterances are often under-specified or ambiguous; they could be interpreted in different ways and in turn be mapped to entirely different SQL queries. Consider the example above: the definition of “bad answers” is not well-defined, and in fact could be subjective. Since under-specified utterances, by definition, can not always be answered correctly, any human or machine attempting to answer such a question would have to either make an assumption on the requirement (usually based on previously seen examples) or ask follow-up questions in an interactive setting.

In addition to the above challenges, SEDE also introduces the use of parameters in the SQL query, dates manipulation, textual manipulation, the use of the CASE clause, numerical computations, and more.

Text-to-SQL Evaluation

Text-to-SQL models are usually evaluated in two different forms: Execution Accuracy and Logical Forms Accuracy. In short Execution Accuracy is measured by executing both the predicted (the query outputted by the text-to-SQL model) and gold (ground-truth annotated SQL query in the dataset) queries against a dataset, and considering the query to be correct if the two output results are the same (or similar enough). On the other hand, Logical Form Accuracy is measured by simply performing a textual comparison between the predicted and gold queries. In our paper, we show why using any of these metrics is difficult when it comes to complex queries (such as the ones in SEDE). Instead, we propose a more loose metric for evaluation of models, called Partial Component Match (PCM). We do this by parsing both the predicted query and the gold query, comparing different parts of the two parsed queries (for example, SELECT, WHERE, etc.) and aggregating the scores into a single metric (refer to our paper for more details!).

We conducted extensive experiments over SEDE with state-of-the-art Text-to-SQL model (we used the T5 model, a seq2seq model based on the well-known Transformer architecture), and we found on our experiments that while on the Spider dataset results were above 85% PCM, those models performed poorly on SEDE, barely achieving 50% PCM.

Use our SEDE Dataset!

We hope that the release of this dataset, along with its unique and challenging properties, will pave a path for future work on the generalization of Text-to-SQL models beyond academic setups and instead, make data all over the world accessible, for anyone.

We encourage you to download our dataset and use it to improve your Text-to-SQL models for your organization!

Our dataset and code to run all experiments and metrics are available at https://github.com/hirupert/sede. We also encourage you to read our paper.

Acknowledgment

We thank Kevin Montrose and the rest of the Stack Exchange team for providing the raw query log.

Written by: Ben Bogin, Moshe Hazoom, & Vibhor Malik